Indigenous Advancement Strategy Evaluation Framework

Scope

This Evaluation Framework is a guide for the evaluation of programs and activities under the Indigenous Advancement Strategy (IAS), delivered by the National Indigenous Australians Agency (NIAA). The Evaluation Framework is intended to align with the wider role of the Productivity Commission in overseeing the development and implementation of a whole-of-government evaluation strategy for policies and programs that affect Indigenous Australians [1].

This Evaluation Framework guides the conduct and development of a stronger approach to evaluation. The goals of this Framework are to:

- generate high quality evidence that is used to inform decision making,

- strengthen Indigenous leadership in evaluation,

- build capability by fostering a collaborative culture of evaluative thinking and continuous learning,

- place collaboration and ethical, high quality evaluation at the forefront of evaluation practice in order to inform decision making, and

- promote dialogue and deliberation to further develop the maturity of evaluation over time.

Purpose

The Evaluation Framework is designed to ensure that evaluation is high quality, ethical, inclusive and focused on improving outcomes for Indigenous Australians. This recognises that high quality evaluation is more likely to be used. The Framework aims to apply consistent standards to the evaluation of IAS programs without imposing a ‘one-size-fits-all’ model of evaluation.

The core values at the heart of this Framework inform our policies and programs, and drive fundamental questions about how well IAS programs and activities build on strengths to make a positive contribution to the lives of Aboriginal and Torres Strait Islander peoples.

The Framework sets out best practice principles that call for evaluations to be relevant, robust, appropriate and credible. High quality evaluation should be integrated into the cycles of policy and community decision-making, and should be collaborative, timely and culturally inclusive.

Our approach to evaluation reflects a strong commitment to working with Indigenous Australians. Driven by the core values of the Framework, our collaborative efforts centre on recognising the strengths of Aboriginal and Torres Strait Islander peoples, communities and cultures.

Fostering leadership and bringing the diverse perspectives of Indigenous Australians into evaluation processes helps ensure the relevance, credibility and usefulness of evaluation findings.

In evaluation, this means we value the involvement of Indigenous Australian evaluators in conducting all forms of evaluation, particularly using participatory methods that grow our mutual understanding.

Performance monitoring, review, evaluation and audit are critical parts of the policy process. They provide assurance that programs and activities are delivering outcomes as intended, help inform future policy design and support decision-making by government and communities. This Evaluation Framework builds on existing approaches to performance and evaluation under the IAS and supports NIAA’s commitment to performance measurement as required under the Public Governance, Performance and Accountability (PGPA) Act 2013.

Generating Evidence

Monitoring and evaluation in NIAA is guided by the performance reporting requirements under the PGPA Act. Monitoring and evaluation systems have complementary roles and purposes in evidence generation.

Performance is described in the PGPA Act as the extent to which an activity achieves its intended purpose. In making this assessment, it is important to establish a shared understanding of the purpose of an activity and how it is intended to work. A commitment to working collaboratively is essential in the Indigenous Affairs context.

Developing a clear theory of change helps to clarify purpose and determine what information is needed to assess performance. Without a sound understanding of purpose, it will not be possible to determine what results and impacts have been achieved.

Orienting monitoring and evaluation towards impact shifts the focus from outputs and indicators towards impact-related questions. This shift provides timely data to guide adaptive management and supports collective sense-making of data (Peersman et al. 2016).

Evaluation

Evaluation is the practice of systematic measurement of the significance, merit and worth of policies and programs, undertaken to understand and improve decisions about investment. Evaluation involves the assessment of outcomes and operations of programs or policy compared to expectations, in order to make improvements (Weiss 1998).

We apply this definition of evaluation as systematic assessment that supports Indigenous Australians, communities and government to understand what is working, or what is not working, and why. To do this the Framework requires evaluations to assess programs and activities against a set of core values. These core values are intended to help support evaluations under the Indigenous Advancement Strategy (IAS) to take into account the importance of aspirations held by Indigenous Australians.

Systematic assessment uses formal evaluation logic. This in-depth reasoning aims to provide credible and defensible evidence, which is used to inform decisions and highlight important lessons.

Good evaluation is planned from the start, and provides feedback along the way (ANAO 2014). Good evaluation is systematic, defensible, credible and unbiased. It is respectful of diverse voices and world views. To do this the Framework requires all evaluations under the IAS to build in appropriate processes for collaborating with Indigenous Australians.

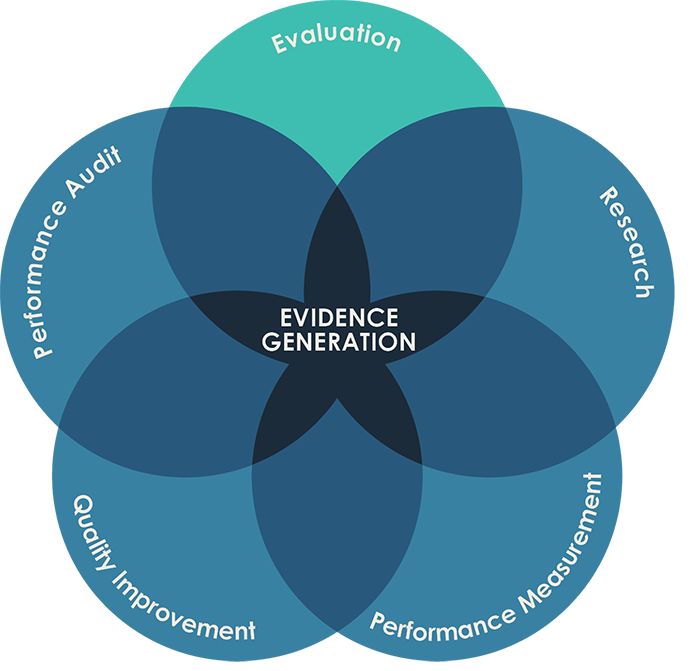

Evaluation is distinct from but related to monitoring and performance reviews. Evaluation may use data gathered in monitoring as one source of evidence, while information obtained through monitoring and performance reviews may help inform evaluation priorities (see Figure 1).

The Evaluation Framework supports an impact-oriented evaluation system, intended to generate and use evidence of the links between funded activities under the IAS and how these translate to improved outcomes for Indigenous Australians.

The Evaluation Framework focuses effort on delivering high quality, robust evaluations, selected through a prioritisation process that gives precedence to understanding impact.

Impact evaluation provides information about the difference produced by an activity, and assists in measuring and improving performance.

Monitoring

Monitoring is the regular and continuous review of activities. Monitoring provides useful insights into operational effectiveness and efficiency of funded activities. Monitoring and grant activity reviews are core business and should feed into continuous quality improvement cycles.

A Performance Framework complements the Evaluation Framework by covering the monitoring and performance measurement components of the system. The Performance Framework guides the collection and reporting of routinely collected key performance indicators that are linked to the objectives of the IAS. It covers monitoring of all IAS grants. The Performance Framework also covers targeted grant activity reviews, designed to support continual quality improvement in service delivery.

The Evaluation Framework and Performance Framework operate in tandem to guide the conduct of monitoring and evaluation of the IAS.

Figure 1: Different types of evidence inform evaluation practice (Lovato & Hutchinson 2017)

A Principles-based Framework

This Framework outlines standards to guide a consistent approach to all evaluation activity.

Our standards include a set of core values and best practice principles, which aim to ensure evaluation is high quality and therefore of use in cycles of policy and community decision-making.

Core values

Central to the Evaluation Framework is a commitment to working collaboratively. Recognising the strengths of Aboriginal and Torres Strait Islander people, communities and cultures is integral to evaluation in the Indigenous Affairs policy context.

All evaluations of IAS programs and activities will address three core values. The values provide a consistent reference point for judging appropriateness, which helps decision-makers better understand the merit, worth and significance of policies and programs across the IAS. All evaluations will test the extent to which IAS programs and activities:

- build on strengths to make a positive contribution to the lives of current and future generations of Indigenous Australians

- are designed and delivered in collaboration with Indigenous Australians, ensuring diverse voices are heard and respected, and

- demonstrate cultural respect towards Indigenous Australians.

These core values reflect the significant role that the strengths of Indigenous people play in generating effective policy and programs.

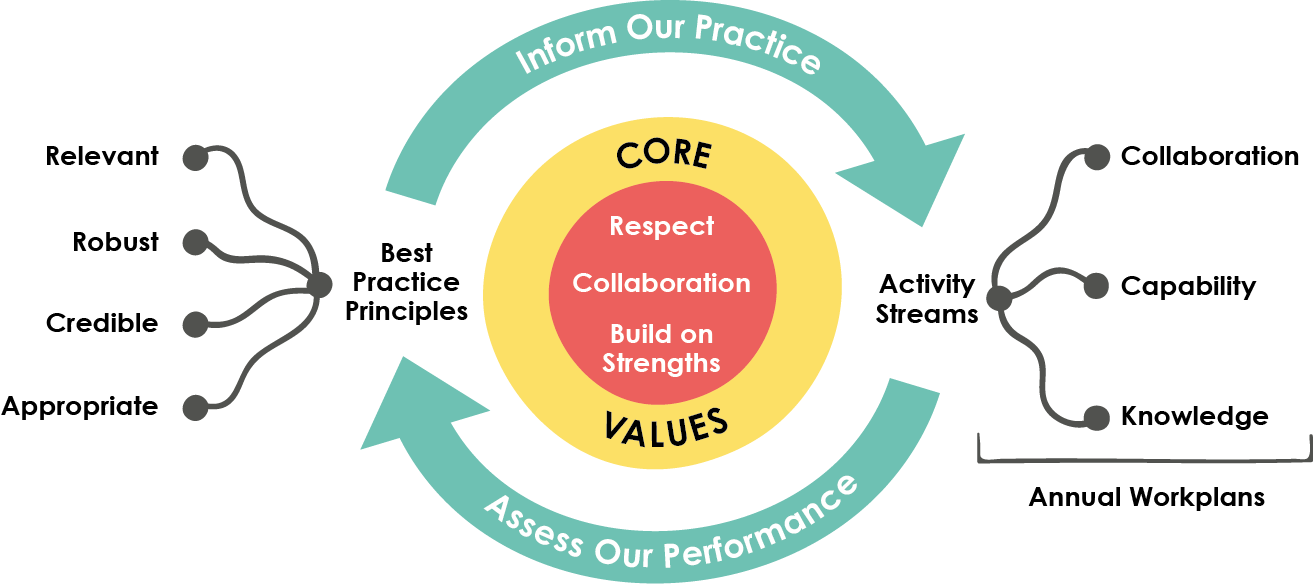

The core values guide central evaluation questions for all evaluations, and are the foundation for the best practice principles and streams of activity of this Framework.

Best practice principles

All evaluations are guided by a set of best practice principles (detailed in Table 1). The principles are grouped under four broad criteria: relevant, robust, credible and appropriate. They provide a benchmark to aspire towards, and are a gauge for assessing the performance of the Framework itself. Reviewing effort under the Framework against the best practice principles creates feedback loops for continual learning and adaptation. The principles also inform the streams of activity: collaboration, capability and knowledge (Figure 2).

Figure 2: Core values and best practice principles guide activities of the Framework

Table 1: Best practice principles

| Criteria | Principles | Description |
|---|---|---|
| Relevant | Integrated | Evaluation supports learning, evidence-based decision-making and improvements in service delivery; it is not a compliance activity. Evaluation planning is undertaken at the outset when policy and programs are designed. Findings from past evaluations inform policy decisions. |
| | Respectful | Collaborative approaches are strengths-based, build partnerships and demonstrate cultural respect towards Indigenous Australians. Evaluation integrates diverse Indigenous perspectives with the core values to ensure findings are meaningful, relevant and useful to Indigenous communities and government. |
| Robust | Evidence-based | Robust evaluation methodologies and analytical methods are used to understand the effects of programs in real-world settings and inform program design and implementation. Appropriate data is collected to support evaluation. |
| | Impact focused | Evaluation is focused on examining the impact of Indigenous Advancement Strategy investment. Evaluations rigorously test the causal explanations that make programs viable and effective across different community and organisational settings. |
| Credible | Transparent | Evaluation reports (or summaries) will be made publicly available through appropriate, ethical and collaborative consent processes. |
| | Independent | Evaluation governance bodies have some independence from the responsible policy and program areas. Evaluators, while working with suppliers and with policy and program areas, will have some independence. |
| | Ethical | Ethical practice meets the highest standards for respectful involvement of Indigenous Australians in evaluation. |
| Appropriate | Timely | Evaluation planning is guided by the timing of critical decisions to ensure specific and sufficient bodies of evidence are available when needed. |
| | Fit-for-Purpose | Evaluation design is appropriate to Indigenous values and considers place, program lifecycle, feasibility, data availability and value for money. |
| | | Evaluation is responsive to place and is appropriate to the Indigenous communities in which programs are implemented. The scale of effort and resources devoted to evaluation is proportional to the potential significance, contribution or risk of the program/activity. |

Relevant: use of evaluation

High quality, systematic evaluation is critical to policy processes and community decision-making. Evidence helps inform policy and program design and supports decision-making by government and communities, to improve outcomes for Indigenous Australians.

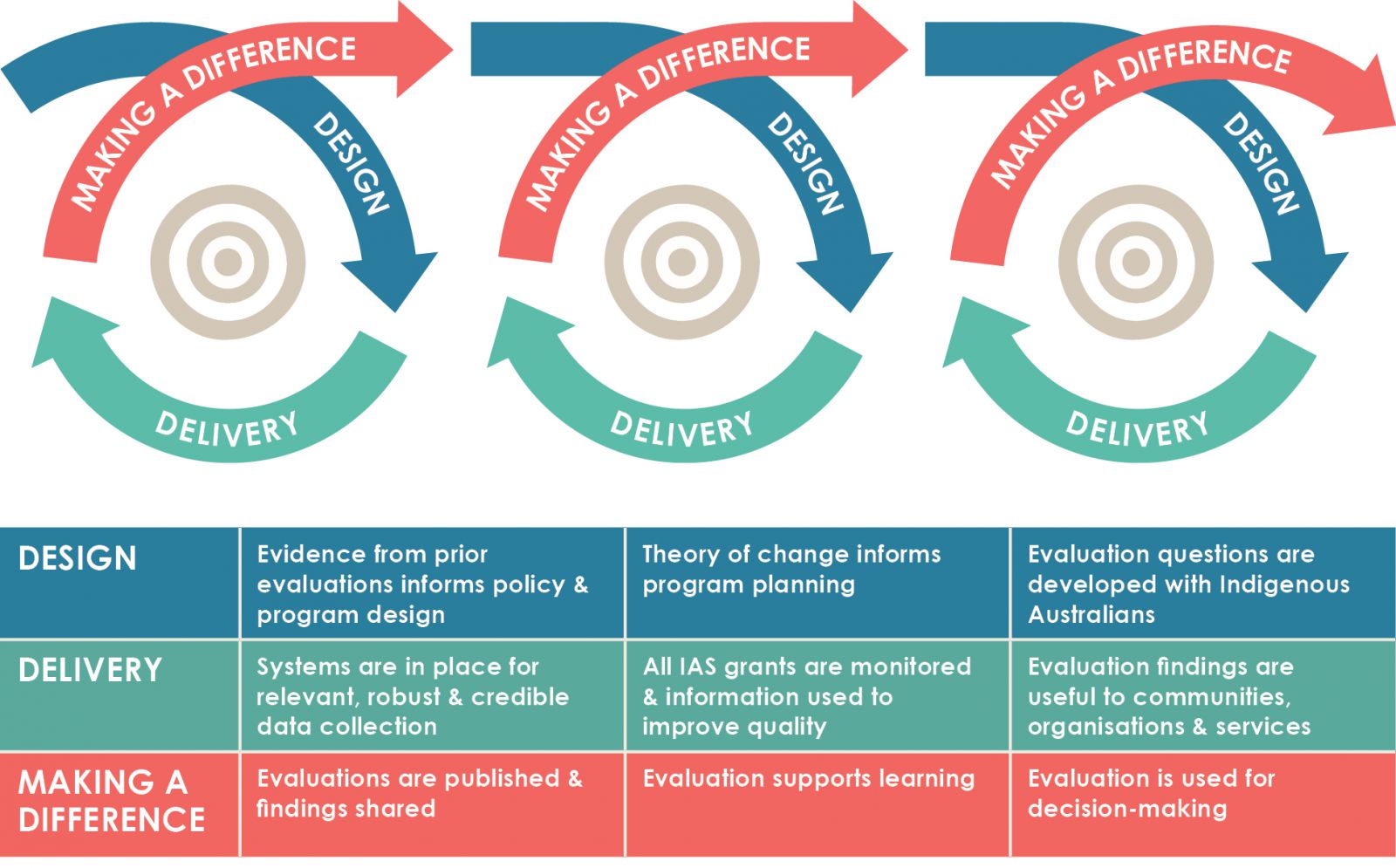

Good evaluation design needs to be planned at the start of policy or program development. A clear theory of change should be developed. Relevant data must be identified. Systems need to be in place to collect and store data. Evaluation can be conducted throughout program delivery to support continuous improvement. Addressing complex, interconnected problems often requires adaptive solutions and interactive feedback cycles. Findings need to be shared throughout the evaluation process to help inform decisions about future policies and programs, growing the body of knowledge about what is helpful, useful and effective in making a difference.

Evaluation needs to be integrated into the feedback cycles of policy, program design and evidence-informed decision-making. Evaluation feedback cycles can provide insights to service providers and communities to enhance the evidence available to support positive change. To increase accountability and learning, this feedback can occur at many points in the cycle (Figure 3).

Evaluation activities under the Framework must be respectful of Indigenous Australians and integrated to generate timely and relevant evidence for decision-making. Respect towards Indigenous Australians that recognises Indigenous values and diversity of perspectives is fundamental. High quality evaluation incorporates Indigenous perspectives in refining knowledge about outcomes and in broader framing of the definitions of program success. Credible evaluations, underpinned by ethical practice, generate high quality evidence that can be used for policy and program design into the future.

Figure 3: Monitoring and evaluation in policy and feedback cycles

Robust: Improving the quality of evidence

The Evaluation Framework has an explicit focus on impact evaluation and strategic cross-cutting evaluations. This focus is appropriate to the evaluation needs of the Indigenous Advancement Strategy. Evaluations under the Framework need to be timely and consider outcomes for communities where place-based initiatives are emphasised, as well as the impacts of policies and programs that are implemented Australia-wide. This is similar to the strategic approach adopted in international aid and development effectiveness (DFAT 2016).

Different understandings of impact evaluation exist (Hearn & Buffardi 2016). We purposefully do not narrowly define impact, because evaluations under the Framework must be fit-for-purpose. In practice this means we will not shy away from rigorous approaches to measuring the efficacy of ‘what works’, but that we will not impose a ‘one-size-fits-all’ model of evaluation.

A range of evaluation methodologies can be used to undertake impact evaluation. Evaluations under the Framework will range in scope, scale, and in the kinds of questions they ask. We need to ensure that impact methodology is appropriate for each evaluation. Measuring long-term impact is challenging but important. We need to identify markers of progress that are linked by evidence to the desired outcomes.

The quality of data and evidence can also be improved if the focus of the evaluation is selected to provide the best fit with the evaluation purpose. This involves considering options such as a whole-of-program focus, groups of similar activities, or place-based or people-centred evaluation designs.

The transferability of evaluation findings is critical to ensure relevant and useful knowledge is generated under the Framework. High quality impact evaluations use appropriate methods and draw upon a range of data sources, both qualitative and quantitative.

Evaluation design should utilise methodologies that produce rigorous evidence, including participatory methods. Use of participatory approaches to evaluation is one example of demonstrating the core values of the Framework in practice.

Credible: Governance and Ethics

The Evaluation Framework is designed to ensure evidence generated by evaluations is credible through demonstrating appropriate levels of independence.

Clear governance is necessary to guide roles and responsibilities within NIAA. These roles include prioritising evaluation effort, ensuring the quality of evaluations, assessing progress in achieving the goals of the Framework, supporting the use of evaluation, and building evaluation capability and capacity.

Roles and responsibilities

Executive Board

The NIAA Executive Board will approve the Annual Evaluation Work Plan and review progress reports.

The Minister for Indigenous Australians will be informed of the Annual Evaluation Work Plan and will receive progress reports against it.

Indigenous Evaluation Committee

This independent external committee will ensure that evaluations are prioritised and conducted independently and impartially, and will support transparency and openness in the implementation of the Framework.

The Committee:

- oversees the implementation of the Framework

- endorses the Annual Evaluation Work Plan, and

- provides ongoing advice, guidance, quality assurance and review.

Policy Analysis and Evaluation Branch

The Evaluation Framework is led by the Policy Analysis and Evaluation Branch in NIAA. This Branch also leads the development of the Annual Evaluation Work Plan, is responsible for strategic evaluations, provides guidance and support for program evaluations, and supports evaluation capability and capacity building.

Ethical evaluations

All evaluations under the Framework are subject to ethical review. Evaluators will continue to seek guidance from relevant ethics committees.

To ensure the highest standards for ethical research are met, evaluation strategies will be informed by the Australian Institute of Aboriginal and Torres Strait Islander Studies’ Code of Ethics for Aboriginal and Torres Strait Islander Research (AIATSIS 2020). This Code of Ethics aims to support the pursuit of high quality evaluation by recommending a process of meaningful engagement and reciprocity with the individuals and/or communities involved in the research. This includes the protection of privacy and assurances of confidentiality ensured through ethics protocols.

The core values of the Evaluation Framework will provide a reference point for the development of evaluation questions and evaluation methodology that is consistent with ethical requirements.

Appropriate: Timely and fit-for-purpose

Evaluation activity under the Framework must be timely and fit-for-purpose. High quality evaluations under the Framework will be selected in order to inform decisions within the policy/program feedback cycle and will adopt appropriate evaluative methodology.

Evaluation efforts will be prioritised, recognising that it is neither possible nor desirable to evaluate all activities funded by the Department. Sometimes monitoring is sufficient. Evaluation activities will be published in an Annual Evaluation Work Plan and will reflect feedback cycles in policy and program delivery. The Annual Evaluation Work Plan will be reviewed and endorsed by the independent Indigenous Evaluation Committee and approved by the NIAA Executive Board.

Prioritising effort

Prioritisation will consider significance, contribution and risk. Significant, high risk programs/activities will be subject to comprehensive independent evaluation. While evaluation priorities will be identified over four years, priority areas remain flexible in order to respond to changing circumstances.

| Consideration | Description |
|---|---|
| Significance | Size and reach of the program or activity, emphasising what matters to Indigenous Australians as well as policy makers |
| Contribution | Strategic need, potential contribution and importance of evidence in relation to the policy cycle |
| ‘Policy risk’ level | Indicated by gaps in the evidence base, and assessments from past evaluations or reviews of the program or activity |
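The Framework does not prescribe a scoring scheme for prioritisation. As a purely illustrative sketch, the stated rules (significant, high risk programs/activities receive comprehensive independent evaluation; sometimes monitoring is sufficient) could be expressed as a simple screening function. The three-level `Rating` scale, the `Program` fields and the intermediate "targeted evaluation" tier are hypothetical assumptions; in practice, prioritisation remains a deliberative process in which the Policy Analysis and Evaluation Branch develops, the Indigenous Evaluation Committee endorses and the NIAA Executive Board approves the Annual Evaluation Work Plan.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    """Hypothetical three-level rating; the Framework does not define a scale."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Program:
    name: str
    significance: Rating  # size and reach, including what matters to Indigenous Australians
    contribution: Rating  # strategic need and importance of evidence to the policy cycle
    policy_risk: Rating   # gaps in the evidence base, findings of past evaluations or reviews


def evaluation_tier(p: Program) -> str:
    """Suggest an illustrative level of evaluation effort for one program."""
    # Stated rule: significant, high risk programs get comprehensive independent evaluation.
    if p.significance == Rating.HIGH and p.policy_risk == Rating.HIGH:
        return "comprehensive independent evaluation"
    # Hypothetical middle tier: effort proportional to significance, contribution or risk.
    if max(p.significance, p.contribution, p.policy_risk) >= Rating.MEDIUM:
        return "targeted evaluation"
    # Stated in the Framework: sometimes monitoring is sufficient.
    return "monitoring"


if __name__ == "__main__":
    example = Program("Hypothetical program", Rating.HIGH, Rating.LOW, Rating.HIGH)
    print(example.name, "->", evaluation_tier(example))
```

Checking the explicit rule first keeps the one firm commitment in the Framework from being diluted by any proportional tiers layered beneath it.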

Responsive design and practice

Evaluation of Indigenous Advancement Strategy programs and activities needs to ensure that Indigenous people, communities and organisations are appropriately involved in evaluation processes. Evaluation should be undertaken in a way that delivers evidence suitable for its intended use, as well as building knowledge for future reference.

In order to meet ethical standards (AIATSIS 2020), evaluation should also be responsive to place and appropriate to the Indigenous communities in which the programs and policies are implemented. Evaluation design needs to be appropriate to the circumstances of Indigenous communities. Choice of methods needs to balance several constraints, and should consider the timing of data collection to fit with the program life-cycle, the feasibility of methods in particular contexts, the availability of existing data, and value for money.

Our commitment to transparency

Assessing progress of the Framework

Improving transparency helps drive continual improvements in evaluation and increases its use (ANAO 2014).

The Indigenous Evaluation Committee will review all high priority evaluations undertaken by the Policy Analysis and Evaluation Branch. These reviews will be published with the evaluations.

An annual evaluation report will include a summary of reviews of other Indigenous Advancement Strategy (IAS) evaluations. These reviews will be undertaken by the Policy Analysis and Evaluation Branch, with the evaluations to be reviewed selected at random.
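The Framework does not specify how the random selection is carried out. One way to keep such a draw transparent is to record the seed used, so that anyone can reproduce the selection. The sketch below is a minimal illustration under that assumption; the evaluation identifiers, sample size and seed are hypothetical inputs.

```python
import random


def select_for_review(evaluation_ids: list[str], k: int, seed: int) -> list[str]:
    """Draw k evaluations at random, without replacement, in a reproducible way.

    The seed would be recorded (for example, in the annual evaluation report)
    so the draw can be re-run and checked. All inputs are hypothetical.
    """
    if not 0 <= k <= len(evaluation_ids):
        raise ValueError("k must be between 0 and the number of evaluations")
    rng = random.Random(seed)  # dedicated generator; leaves global random state untouched
    # Sort first so the result depends only on the set of IDs, not their input order.
    return rng.sample(sorted(evaluation_ids), k)


if __name__ == "__main__":
    ids = ["EVAL-01", "EVAL-02", "EVAL-03", "EVAL-04", "EVAL-05"]
    print(select_for_review(ids, k=2, seed=2024))
```

Sorting the identifiers before sampling means the same seed yields the same selection regardless of the order in which evaluations were listed.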

At the three-year mark, an independent meta-review of IAS evaluations will be undertaken to assess the extent to which the Framework has achieved its aims for greater capability, integration and use of robust evaluation evidence, against the standards described under each of the best practice principles.

Improving transparency to support the use of evaluations

Sharing evaluations helps inform design, delivery and decision-making. Under the Framework, all evaluation reports or summaries will be made publicly available. In cases where ethical confidentiality concerns or commercial in confidence requirements trigger a restricted release, summaries of the findings will be published in lieu of a full report.

Evaluation findings will be of interest to communities and service providers implementing programs as well as government decision-makers. Evaluation activities under the Framework will be designed to support service providers in gaining feedback about innovative approaches to program implementation and practical strategies for achieving positive outcomes across a range of community settings. In line with ethical evaluation practice, communities that have participated in evaluations will be provided with the results, to support feedback loops in evaluation use.

Additional approaches to making evaluation findings available will be considered as part of knowledge translation to ensure the ongoing use of evaluation evidence by people, communities and policy makers.

Within NIAA, senior management will provide responses to evaluations following completion, and identified actions will be followed up and recorded.

Building a culture of evaluative thinking

To move towards best practices in evaluation, the Framework will implement three concurrent streams of complementary activities to support continual learning and development covering: collaboration, capability and knowledge (activities are detailed in Table 2).

A key part of building a culture of evaluative thinking through these activities will be dialogue and deliberation about best practice in evaluation to support development of the maturity of evaluation over time.

Collaboration

Recognising the strengths of Aboriginal and Torres Strait Islander communities and cultures, working collaboratively will be an integral part of evaluation activity under the Framework.

Collaboration across different areas of expertise will contribute to solving complex problems.

This means seeking and including the expertise of Indigenous Australians, service providers and academics. The Collaboration stream of the Framework will establish, negotiate and nurture a range of partnerships and engagement processes.

Capability

Building evaluation capability is critical to delivering high quality evaluations under the Framework and to enhancing their use in policy development. High quality evaluations will assess policies and programs against the core values, and their implementation must be aligned with the best practice principles.

The Framework is not a ‘how to’ guide to evaluating policies and programs. The Framework promotes a systems approach to building evaluation capability and seeks to build an environment of continuous improvement.

Resource materials will support and encourage NIAA staff, as well as service providers and Aboriginal and Torres Strait Islander communities, to do and use evaluation in line with the core values and principles.

Knowledge

Growing the body of credible knowledge available to policy making processes needs to be based on rigorous evidence. Evaluation design should integrate community values, knowledge and perspectives to ensure findings are useful, credible and helpful (Grey et al. 2016). Knowledge generated by evaluations should be shared, transferable and clearly outline the underlying assumptions of a program and how it is expected to influence changes in outcomes.

Knowledge translation will provide evidence of learning and of the integration of evaluation towards improvement. This will include generating and sharing transferable knowledge about impacts and interdependencies in policy and program design and implementation. This spans the information needed to support program delivery practice, to inform place-based approaches and to guide overarching policy development.

Table 2: Streams of activity

| Stream | Activities |
|---|---|
| Collaboration | |
| Capability | |
| Knowledge | |

Next steps

Consultation about this Evaluation Framework shows that there is a range of issues that should be discussed in the development and implementation of the approach to evaluation under the IAS. It will be important to deliberate about these issues through a range of collaboration activities. Review is built into the Framework, and ongoing dialogue will support the maturation of our approach to evaluation.

Glossary

Criteria of merit – Level of excellence tied to accepted standards.

Cross-cutting – Themes identified in the annual work plans that function as lenses through which evaluation may examine the interconnections across and within activities and systems. These are critical considerations for successful outcomes under the Indigenous Advancement Strategy.

Evaluation logic – High quality, rigorous evaluations establish standards of success to form evaluative judgement, ask clear evaluation questions, systematically collect and analyse the data needed to answer those questions, and reach a meaningful assessment about the merit, worth or significance of a policy or program.

Impact evaluation – Assessment of whether an intervention makes a difference. This term has a range of meanings. Our usage is broad, covering positive and negative, primary and secondary effects that are produced by an intervention over the short, medium and long-term, either directly or indirectly, intended or unintended. The key feature is the study of the net effect, or difference, which can be attributed to the intervention. See www.betterevaluation.org/themes/impact_evaluation for examples of impact evaluation methodologies.

Participatory methods – There is a wide range of participatory approaches that can be used at different stages of evaluation to involve stakeholders, particularly participants, in specific aspects of the evaluation process. Participatory methods include approaches where “representatives of agencies and stakeholders (including beneficiaries) work together in designing, carrying out and interpreting an evaluation” (OECD 2002). For an example in practice, see Sutton et al. (2016).

SMART – Specific: targets a specific area for measurement; Measurable: ensures information can be readily obtained; Attributable: ensures that each measure is linked to the project’s efforts; Realistic: data can be obtained in a timely fashion; Targeted: to the objective population (Gertler et al. 2011, p. 27).

Standards – Values inform standards in making evaluative judgements. Evaluation (as opposed to research) makes transparent assessments against referenced values through four steps: 1) establishing criteria of merit; 2) setting standards; 3) measuring performance; and 4) synthesis or integration to make a judgement of merit or worth (Owen & Rogers 1999).

Theory of Change – A theory of change is “a comprehensive description and illustration of how and why a desired change is expected to happen in a particular context. It is focused in particular on mapping out or ‘filling in’ what has been described as the ‘missing middle’ between what a program or change initiative does (its activities or interventions) and how these lead to desired goals being achieved.” http://www.theoryofchange.org/what-is-theory-of-change/

References

Australian Government Department of Finance 2016, Related rules and guidance to the Public Governance, Performance and Accountability Act 2013 (PGPA Act). http://www.finance.gov.au/resource-management/pgpa-act/

Australian Government Department of Finance 2015, Resource Management Guide No. 131: Developing good performance information. https://www.finance.gov.au/sites/default/files/RMG%20131%20Developing%20good%20performance%20information.pdf

Australian Government Department of Foreign Affairs and Trade 2016, Office of Development Effectiveness: Aid Evaluation Policy. http://dfat.gov.au/aid/how-we-measure-performance/ode/Pages/aid-evaluation-policy.aspx

Australian Institute of Aboriginal and Torres Strait Islander Studies (AIATSIS) 2020, Code of Ethics for Aboriginal and Torres Strait Islander Research, AIATSIS, Canberra. https://aiatsis.gov.au/research/ethical-research/code-ethics

Australian National Audit Office (ANAO) 2014, Better Practice Guide ‘Public Sector Governance: Strengthening Performance Through Good Governance’. https://www.anao.gov.au/work/better-practice-guide/public-sector-governance-strengthening-performance-through-good

Banks, G 2009, Challenges of Evidence-based Policy Making, Australian Public Service Commission, Canberra.

Chen, H & Garbe, P 2011, Assessing program outcomes from the bottom up approach: An innovative perspective to outcome evaluation. In H Chen, S Donaldson & M Mark (eds), Advancing Validity in Outcome Evaluation: Theory and Practice. New Directions for Evaluation, vol. 130 pp. 93-106.

Gertler, P, Martinez, S, Premand, P, Rawlings, L & Vermeersch, C 2011, Impact evaluation in practice. Washington: World Bank. http://www.worldbank.org/pdt

Grey, K, Putt, J, Baxter, N & Sutton, S 2016, Bridging the gap both-ways: Enhancing evaluation quality and utilisation in a study of remote community safety and wellbeing with Indigenous Australians. Evaluation Journal of Australasia, vol. 16, no. 3 pp. 15-24.

Greene, J 2006, Evaluation, democracy, and social change. In I Shaw, J Greene, & M Mark (eds), Handbook of evaluation: policies, programs and practices, Sage, Thousand Oaks.

Hearn, S & Buffardi, A 2016, What is Impact? A Methods Lab publication. London, Overseas Development Institute.

Kawakami, A, Aton, K, Cram, F, Lai, M & Porima, L 2007, Improving the practice of evaluation through indigenous values and methods: Decolonizing evaluation practice—returning the gaze from Hawaii and Aotearoa. In P Brandon & P Smith (eds), Fundamental issues in evaluation, pp. 219-242, Guilford Press, New York, NY.

Lovato, C & Hutchinson, K 2017, Evaluation for Health Leaders: Mobile Learning Course. The University of British Columbia, Vancouver. http://evaluationforleaders.org/

OECD 2002, Development Assistance Committee Network on Development Evaluation. Glossary of Key Terms in Evaluation and Results Based Management. OECD, Paris.

Owen, J & Rogers, P 1999, Program Evaluation: forms and approaches (International Edn), Sage, Thousand Oaks.

Peersman, G, Rogers, P, Guijt, I, Hearn, S, Pasanen, T & Buffardi, A 2016, When and How to Develop an Impact-Oriented Monitoring and Evaluation System. A Methods Lab publication. Overseas Development Institute, London.

Rogers, P & Davidson, E 2012, Australian and New Zealand Evaluation Theorists. In M Alkin (ed.), Evaluation Roots: A Wider Perspective of Theorists’ Views and Influences, Sage, Los Angeles.

Sutton, S, Baxter, N & Grey, K 2016, Working both-ways: Using participatory and standardised methodologies with Indigenous Australians in a study of remote community safety and wellbeing. Evaluation Journal of Australasia, vol. 16, no. 4, pp. 30-40.

Weiss, C 1998, Evaluation: methods for studying programs and policies (2nd Edn), Upper Saddle River, NJ, Prentice-Hall.

The Artwork

The artwork by Jordan Lovegrove (Ngarrindjeri) of Dreamtime Creative represents the collaboration between the National Indigenous Australians Agency (NIAA) and the community to evaluate, understand and improve Indigenous Advancement Strategy programs. The large meeting place represents NIAA while the Indigenous Advancement Strategy programs and communities are represented by the smaller meeting places. The pathways connecting the meeting places show NIAA evaluating and working together with the communities. The patterns going outward from NIAA represent the generation and sharing of knowledge.

[1] Recognising that Aboriginal and Torres Strait Islander peoples represent diverse cultural identities, this document uses the term Indigenous Australians for consistency with the IAS policy context.